A ferroelectric–memristor memory for both training and inference

An innovative study published in Nature Electronics in September 2025 introduces a unified memory stack that can function both as a memristor and as a ferroelectric capacitor. Using a hybrid array built from this stack, the authors validate an on-chip learning solution that, without batching, performs competitively with floating-point-precision software models across several benchmarks. The work is a significant step toward energy-efficient edge AI systems that combine high-endurance, low-energy learning with non-destructive reads for inference.

This study was conducted through a national collaboration including Michele Martemucci, François Rummens, Yannick Malot, Tifenn Hirtzlin, Olivier Guille, Simon Martin, Catherine Carabasse, Laurent Grenouillet and Elisa Vianello from Université Grenoble Alpes / CEA; Damien Querlioz from Université Paris-Saclay / C2N; and Adrien F. Vincent and Sylvain Saïghi from Université de Bordeaux, IMS.

The work is funded by the Anticipate project, a collaboration between CEA-Leti and the IMS Laboratory.

Detail:

https://doi.org/10.1038/s41928-025-01454-7

Martemucci, M., Rummens, F., Malot, Y. et al. A ferroelectric–memristor memory for both training and inference. Nat Electron 8, 921–933 (2025).

Abstract:

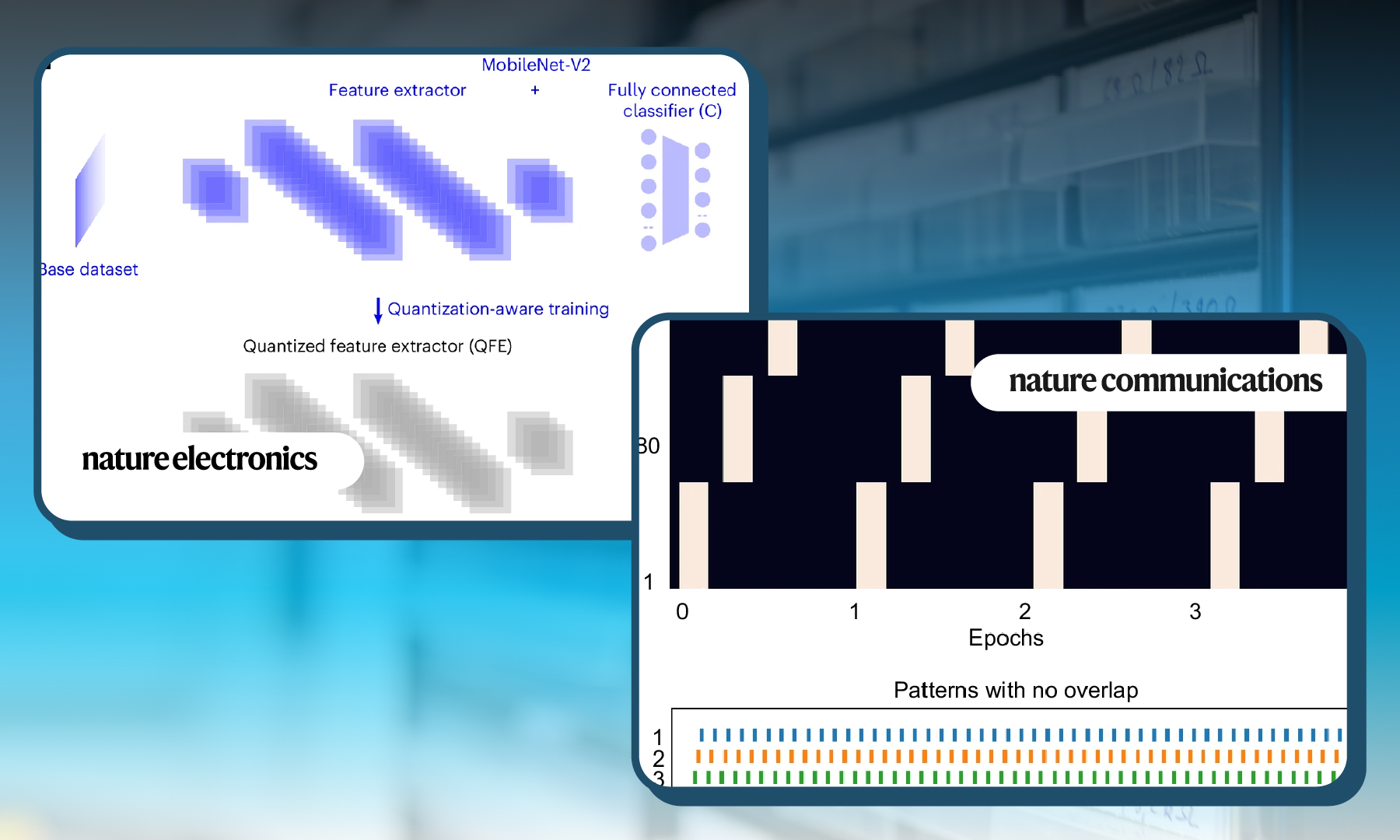

Developing artificial intelligence systems that are capable of learning at the edge of a network requires both energy-efficient inference and learning. However, current memory technology cannot provide the necessary combination of high endurance, low programming energy and non-destructive read processes. Here we report a unified memory stack that functions as a memristor as well as a ferroelectric capacitor. Memristors are ideal for inference but have limited endurance and high programming energy; ferroelectric capacitors are ideal for learning, but their destructive read process makes them unsuitable for inference. Our memory stack uses a silicon-doped hafnium oxide and titanium scavenging layer that are integrated into the back end of line of a complementary metal–oxide–semiconductor process. With this approach, we fabricate an 18,432-device hybrid array (consisting of 16,384 ferroelectric capacitors and 2,048 memristors) with on-chip complementary metal–oxide–semiconductor periphery circuits. Each weight is associated with an analogue value stored as conductance levels in the memristors and a high-precision hidden value stored as a signed integer in the ferroelectric capacitors. Weight transfers between the different memory technologies occur without a formal digital-to-analogue converter. We use the array to validate an on-chip learning solution that, without batching, performs competitively with floating-point-precision software models across several benchmarks.
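The weight scheme described in the abstract, an analogue value held in the memristors paired with a signed-integer hidden value held in the ferroelectric capacitors, can be illustrated with a minimal Python sketch. It is not the authors' implementation: the 8-bit hidden range, the five symmetric analogue levels, the delta-rule update in the hypothetical `train_step` helper and the refresh after every sample are all assumptions chosen for illustration, and the DAC-free transfer circuit is not modelled. Inference reads only the analogue weights, while learning writes only the hidden integers, one sample at a time.

```python
import numpy as np


class HybridWeights:
    """Hypothetical sketch of a two-part weight: signed-integer hidden value + coarse analogue level."""

    def __init__(self, shape, hidden_bits=8, half_levels=2, seed=0):
        rng = np.random.default_rng(seed)
        self.h_min = -(2 ** (hidden_bits - 1))   # -128 for 8 bits (assumed width)
        self.h_max = 2 ** (hidden_bits - 1) - 1  # 127 for 8 bits
        self.half_levels = half_levels           # analogue levels -L..L, i.e. 2L+1 levels (assumed)
        self.scale = 2 ** (hidden_bits - 1) / half_levels
        # Small random hidden values; all of them start on the zero analogue level
        self.hidden = rng.integers(-4, 5, size=shape).astype(np.int32)
        self.analog = self._quantize(self.hidden)

    def _quantize(self, hidden):
        # Round each hidden integer to one of 2L+1 symmetric levels, normalised to [-1, 1]
        level = np.clip(np.rint(hidden / self.scale), -self.half_levels, self.half_levels)
        return level / self.half_levels

    def forward(self, x):
        # Inference reads only the analogue weights (the memristor role)
        return self.analog @ x

    def update(self, int_delta):
        # Learning writes only the hidden integers (the ferroelectric role), saturating at the range ends
        self.hidden = np.clip(self.hidden + int_delta, self.h_min, self.h_max).astype(np.int32)

    def transfer(self):
        # Refresh the analogue weights from the hidden values
        self.analog = self._quantize(self.hidden)


def train_step(w, x, target, lr=8.0):
    """One per-sample (unbatched) delta-rule step; the rule itself is an assumption."""
    err = target - w.forward(x)
    w.update(np.rint(lr * np.outer(err, x)).astype(np.int32))
    w.transfer()
    return err
```

In this sketch the analogue level only changes once enough small integer updates have accumulated in the hidden value, so in hardware the memristors would need reprogramming far less often than the hidden values are updated, which is the division of labour the abstract motivates with the limited endurance and high programming energy of memristors.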

A frugal Spiking Neural Network for unsupervised multivariate temporal pattern classification and multichannel spike sorting

An innovative study published in Nature Communications in October 2025 introduces a very frugal Spiking Neural Network (SNN) that learns to identify and classify complex patterns in continuous data streams in a fully unsupervised manner. The approach could be suitable for future embedding into ultra-low-power neuromorphic hardware platforms, such as active neural implants, and it highlights the importance of biomimetic neural networks.

This study was conducted through an international collaboration including Sai Deepesh Pokala, Marie Bernert, and Blaise Yvert from Université Grenoble Alpes / INSERM (France); Takuya Nanami and Takashi Kohno from The University of Tokyo / Institute of Industrial Science (Japan); and Timothée Levi from Université de Bordeaux, IMS (France).

The project is funded by ANR Brainnet, ANR Irvin and UBLIA Biomeg.

Detail:

https://doi.org/10.1038/s41467-025-64231-2

Pokala, S.D., Bernert, M., Nanami, T. et al. A frugal Spiking Neural Network for unsupervised multivariate temporal pattern classification and multichannel spike sorting. Nat Commun 16, 9218 (2025).

Abstract:

Advanced large-scale neural interfaces call for efficient algorithms to automatically process and optimally exploit the richness of their heavy continuous flow of data. In this context, we introduce here a very frugal generic single-layer Spiking Neural Network (SNN) for fully unsupervised identification and classification of multivariate temporal patterns in continuous data streams. This approach is first validated on simulated multivariate data, Mel Cepstral representations of speech sounds, and multichannel multiunit neural recordings. Then, this very simple SNN was found to be effective at classifying action potentials in a fully unsupervised and online-compatible mode on simulated and real spike sorting datasets. These results pave the way for highly frugal SNN architectures for automatic unsupervised real-time pattern recognition in high-dimensional neural data recordings, which could be suitable for future embedding into ultra-low power hardware platforms, such as active neural implants.
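The abstract does not detail the network's dynamics, so the following Python sketch shows only a generic single-layer spiking network of the kind often used for unsupervised pattern learning: leaky integrate-and-fire neurons, a winner-take-all reset standing in for lateral inhibition, and a simplified STDP-like rule that pulls the winning neuron's weights toward recently active inputs. The class name `WTALayer`, the time constant, threshold, learning rate and weight initialisation are all hypothetical and are not taken from the paper.

```python
import numpy as np


class WTALayer:
    """Hypothetical single-layer LIF network with winner-take-all and a simplified STDP-like rule."""

    def __init__(self, n_inputs, n_neurons, tau=10.0, threshold=1.0, lr=0.05, seed=0):
        rng = np.random.default_rng(seed)
        self.w = rng.uniform(0.2, 0.3, size=(n_neurons, n_inputs))  # assumed initial weights
        self.v = np.zeros(n_neurons)        # membrane potentials
        self.trace = np.zeros(n_inputs)     # presynaptic activity traces
        self.decay = np.exp(-1.0 / tau)     # per-step leak factor
        self.threshold = threshold
        self.lr = lr

    def step(self, spikes_in):
        """Advance one time step; return the index of the neuron that fired, or -1."""
        self.trace = self.trace * self.decay + spikes_in
        self.v = self.v * self.decay + self.w @ spikes_in
        winner = int(np.argmax(self.v))
        if self.v[winner] < self.threshold:
            return -1
        # STDP-like update: potentiate recently active inputs, depress silent ones
        self.w[winner] += self.lr * (self.trace - self.w[winner])
        self.w[winner] = np.clip(self.w[winner], 0.0, 1.0)
        self.v[:] = 0.0                     # winner-take-all: reset every neuron
        return winner
```

In this sketch, when the layer is fed a stream of input spike vectors (for example, thresholded multichannel signals), each neuron tends to become selective to one recurring pattern, and the index returned by `step` can then serve as an online, unsupervised class label.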